The difference between scenario-based and contextual chatbots

Scenario-based chatbots consist of predefined, structured conversations based on specific scenarios or use cases. In these interactions, the chatbot provides predetermined questions and follows the previously created script to guide the conversation.

On the other hand, contextual chatbots are more intuitive. They use advanced large language models to understand search intent and engage customers in more natural, everyday conversations. Most importantly, they keep learning from each interaction.
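To make the contrast concrete, the scripted flow of a scenario-based chatbot can be pictured as a small state machine. This is a minimal sketch, not our accelerator's implementation: the `Step` class, the `SCRIPT` contents, and the retail returns scenario are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Step:
    """One scripted turn: the bot's line and where each accepted answer leads."""
    question: str
    next_step: dict  # maps an accepted answer to the name of the next step


# Hypothetical script for a retail returns scenario.
SCRIPT = {
    "start": Step("Do you want to return or exchange an item?",
                  {"return": "ask_order", "exchange": "ask_order"}),
    "ask_order": Step("Do you have your order number? (yes/no)",
                      {"yes": "done", "no": "handoff"}),
    "done": Step("Thanks, we will email you a shipping label.", {}),
    "handoff": Step("No problem, connecting you to an agent.", {}),
}


def run_script(answers, script=SCRIPT, start="start"):
    """Walk the script with a list of user answers; return the bot's lines."""
    transcript = []
    current = start
    pending = list(answers)
    while True:
        step = script[current]
        transcript.append(step.question)
        # Stop at a terminal step or when the user has nothing more to say.
        if not step.next_step or not pending:
            return transcript
        answer = pending.pop(0).strip().lower()
        # An answer outside the script cannot be handled: the bot repeats the step.
        current = step.next_step.get(answer, current)
```

Anything the script does not anticipate simply repeats the current question, which is the limitation a contextual chatbot is meant to remove.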

Creating a new app. Integrating new functionalities into an existing one. All you need is one API.

Whether you want your chatbot to be a separate platform or integrate it with your existing app or website, we can help you either way.

Additionally, we can create plugins for your chatbot to meet your specific requirements. Want a chatbot for your retail business that “understands” images? No problem. We will create a chatbot that lets your customers upload images of the products they want and recommends similar ones from your store. Drive success with us as your chatbot software co-creator.

Deliver innovation with LLM contextual chatbots

Created by humans – for humans. An LLM-powered chatbot automates most of your daily tasks, irrespective of your industry or business size.

Performing searches using simple, everyday language. The processes that once required months of learning complex software or hours of manual tasks can now be taken care of in a flash with smart search.

Making the conversation flow more intuitive. Understanding the search intent. Performing rapid, more meaningful searches. This is what you get by developing a contextual chatbot interface.

Powered by LLMs to redefine how users interact with search. These powerful AI models are trained on vast amounts of textual data to understand and generate human-like language.

Why you should choose a custom chatbot solution based on an already proven accelerator

At Vega IT, we strive to understand your specific values, pain points, and objectives. Based on them, we develop innovative software solutions tailored specifically to you. At the same time, we use our chatbot development accelerator to make the process as simple as possible. That means you get the best of both worlds: a software solution that is developed fast and designed to meet your specific requirements.

Data security

Out-of-the-box chatbot APIs do offer some basic security measures but may be limited in how far they can adapt to your specific security needs. Data security is the main concern of any business collecting sensitive information, especially those in highly regulated sectors such as healthcare or finance.

Our accelerated chatbot solutions prioritize data security, allowing you to implement specific security measures based on your project requirements. For example, with a cutting-edge chatbot based on our software accelerator and hosted on your in-house servers, you get full control over your data.

Cost-effectiveness

Tried and tested. Quick implementation. Scalable and adapted to your industry and needs. We don’t build your desired software from scratch. Instead, we have developed a chatbot accelerator: a ready-made software solution that only needs to be tweaked to meet your specific requirements. That is exactly what makes our chatbot development process client-friendly and affordable.

Setting constraints and implementing new functionalities

To work properly, LLM-powered chatbots need precise context. In other words, their answers need to be shaped and tailored to the specific needs of your business and industry. For example, if you are an online fashion retailer, you don’t want users to discuss the latest insurance trends with your chatbot.

Keeping interactions on-topic and meaningful. Providing straightforward answers. Ensuring natural conversation flow. That’s what we aim to achieve with AI chatbots built with our software development accelerator. We don’t create generic software solutions, such as ChatGPT, which cover a range of topics, from fashion to finance. Instead, we focus on developing industry-specific chatbots that cater to your exact business requirements.
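One simple way to picture such a constraint is a scope check that runs before any message reaches the language model. The sketch below is illustrative only: the topic names, keyword sets, refusal text, and the `handle` function are hypothetical, and a production chatbot would more likely use a classifier or the LLM itself instead of keyword matching.

```python
import re

# Hypothetical scope for an online fashion retailer.
ALLOWED_TOPICS = {
    "sizing": {"size", "sizes", "fit", "measurements"},
    "orders": {"order", "delivery", "shipping", "return", "refund"},
    "products": {"dress", "jacket", "shoes", "jeans", "fabric"},
}

REFUSAL = "I can only help with questions about our store and products."


def detect_topic(message):
    """Return the first allowed topic whose keywords appear in the message, else None."""
    words = set(re.findall(r"[a-z]+", message.lower()))
    for topic, keywords in ALLOWED_TOPICS.items():
        if words & keywords:
            return topic
    return None


def build_system_prompt(topic):
    """Compose the instruction text that would accompany the user message to the LLM."""
    return (
        "You are a shopping assistant for an online fashion store. "
        f"Answer only questions about {topic}. "
        "Politely decline anything unrelated."
    )


def handle(message):
    """Return (in_scope, text): a system prompt when in scope, a refusal otherwise."""
    topic = detect_topic(message)
    if topic is None:
        return False, REFUSAL
    return True, build_system_prompt(topic)
```

With this scope, `handle("What are the latest insurance trends?")` is refused before any model call is made, while a question about jacket sizes is passed on with a narrowed system prompt.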

Chatbots across industries

Intelligent chatbots and smart search can automate some of your daily tasks, enhance others, and create new opportunities for innovation. They benefit a wide range of industries.

Why choose a chatbot software development partner?

At Vega IT, we are at our best when matching your goals and ambitions. We want to learn about your specific requirements, desires, and pain points to create a software solution that is fully tailored to you.

Plus, we know the fastest way to create your ideal chatbot software. We’ve distilled 15 years of our tech expertise and domain knowledge into a chatbot accelerator, so we can develop custom software for you without reinventing the wheel. Based on our previous use cases, we have designed an end-to-end solution that allows us to create software for you faster and at a lower cost.

Everything we build with you is fit for purpose and ready to create lasting value for your business.

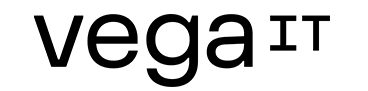

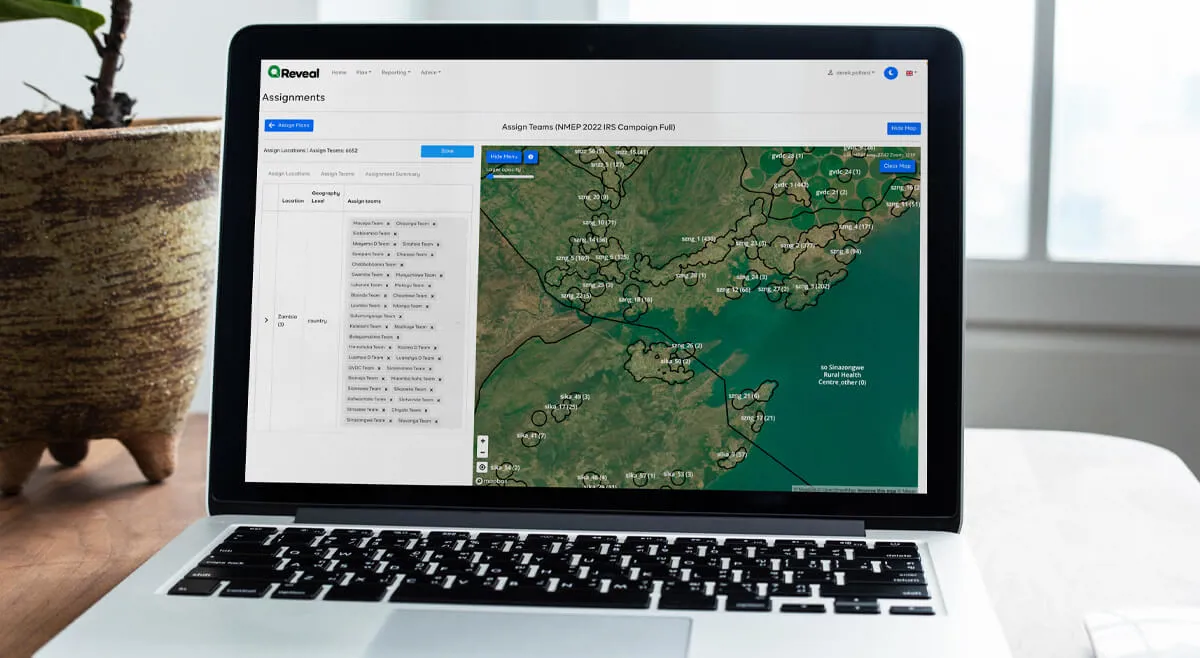

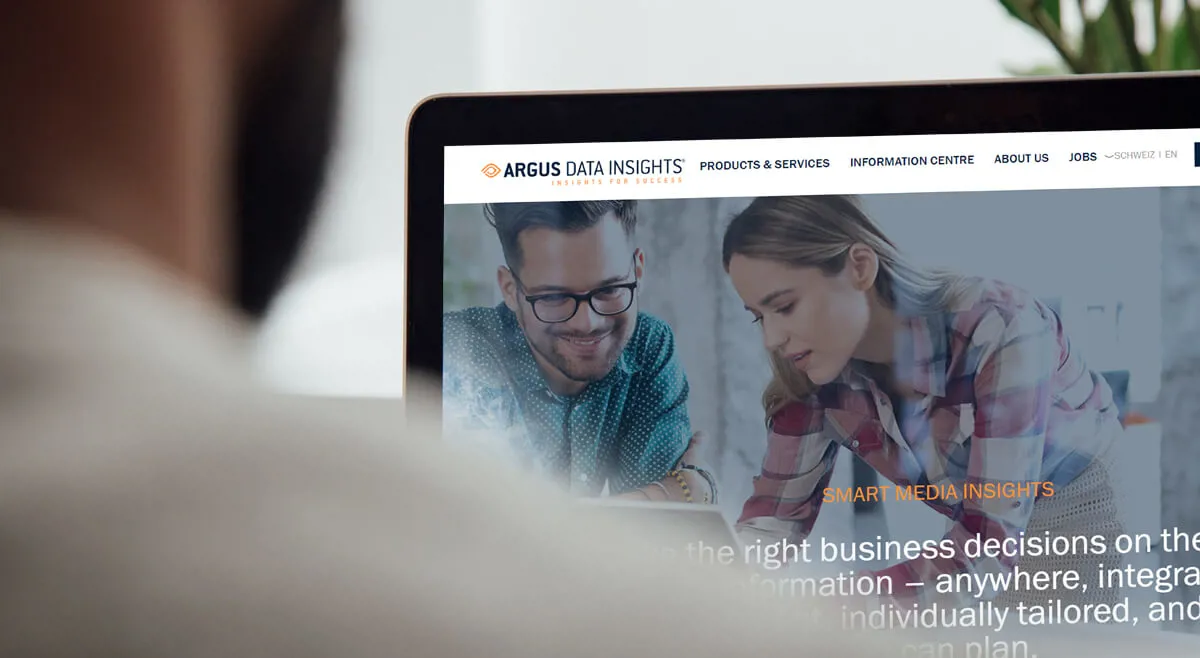

What we have done for our customers

Chatbot hosting options

When it comes to your custom LLM chatbot hosting, there are three options:

Which business model suits you?

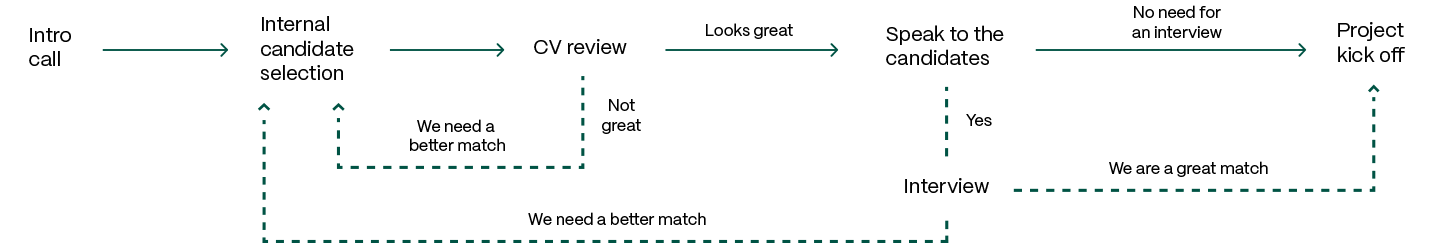

Different budgets, deadlines, challenges, and requirements. There is no one-size-fits-all approach to software development. To match your exact goals and ambitions, we offer two types of business models:

- Time & material: Greater control. Flexibility. Participation in candidate selection. With no rigid processes or end dates, this business model is easier to scale up or down as your business needs change.

- Fixed price: Fixed scope. Fixed budget. Fixed timeline. Those are the main benefits of the fixed price model. You set the requirements upfront, and we deliver the project within them.

Many clients choose to start with the fixed-price model. However, as their project scope evolves, they typically shift to the time & material model.

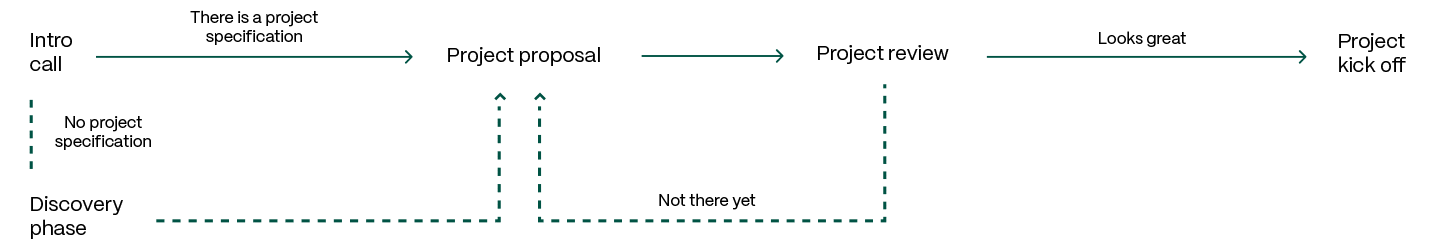

How to get started with chatbot development?

There is no one-size-fits-all solution when it comes to chatbot development. The methodologies we use and the tech stack we choose depend on your industry, business needs, and specific project requirements. You tell us what your chatbot’s purpose is. We discuss your demands with our advisory team and business analysts to create the best plan for software development and implementation.

Your ideas. Our expertise. Let’s build the world you imagine – together.

Strength in numbers

Our tech stack: designed to work with yours

- .NET
- .NET Core
- Java
- Node.js
- Python
- PHP
- React
- Angular
- Vue.js
- HTML
- CSS
- React Native
- Flutter
- Kotlin
- Java for Android
- Swift
- Objective-C
- Azure
- Amazon AWS

Chatbot Software Delivery - FAQ

Software development accelerators are end-to-end components that software companies use to build chatbot solutions for clients faster and at a more affordable price.

There is no one-size-fits-all solution to chatbot creation. It depends on a range of factors, including the chatbot type, your industry, hosting providers, and so forth. At Vega IT, we first take time to get to know your business and, based on what we learn, create the solution that works best for you.

Investing in chatbot APIs may seem cheaper at first glance. However, that is not necessarily so. An out-of-the-box chatbot API usually comes at a lower initial cost since you’re using existing technology and infrastructure. For instance, GPT-4 costs $0.03 per 1,000 prompt tokens. However, that is roughly what you spend on a single request. A complex request may require multiple prompts to both the GPT-4 API and third-party services, so your chatbot costs may reach several thousand dollars per month.
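A quick back-of-the-envelope calculation shows how the per-token price adds up. Only the $0.03 per 1,000 prompt tokens rate comes from the text above; the request volume, prompts per request, and tokens per prompt are hypothetical, and completion tokens and third-party service fees are left out.

```python
def monthly_prompt_cost(requests_per_day, prompts_per_request, tokens_per_prompt,
                        price_per_1k_tokens=0.03, days=30):
    """Estimate monthly spend on prompt tokens only (completion tokens are ignored)."""
    tokens = requests_per_day * prompts_per_request * tokens_per_prompt * days
    return tokens / 1000 * price_per_1k_tokens


# Hypothetical workload: 1,000 user requests a day,
# each needing 3 prompts of about 1,000 tokens.
estimate = monthly_prompt_cost(requests_per_day=1000, prompts_per_request=3,
                               tokens_per_prompt=1000)
print(f"${estimate:,.2f} per month")  # prints "$2,700.00 per month"
```

Under these assumptions the bill is already in the thousands of dollars per month, before counting generated tokens.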

With chatbot solutions based on a software accelerator, you have higher initial costs due to custom development. However, those are one-time expenses that drive long-term success. Once your chatbot is ready, you only pay for the hosting option you choose (a third-party provider or an in-house solution).

Smart search and intelligent chatbots offer multiple benefits to businesses across industries. They simplify a range of internal business operations, from data entry, analysis, and report creation to fraud detection and prevention. Chatbots can also enhance user experiences and drive customer conversions and loyalty through a range of tactics, from personalized marketing to real-time, 24/7 support.

Some examples of large language models are GPT-3.5, GPT-4, LLaMA, Alpaca, Falcon, LaMDA, and NeMo LLM. They belong to several different model families. While all of these models are based on the transformer architecture, they vary in the data sets on which they are trained. That is why their performance on various NLP tasks differs. Therefore, we need to select the model carefully to achieve an optimal fit in terms of cost and performance.

Additionally, LLMs come in different sizes. As a rule of thumb, the larger the model, the greater its capacity. For example, Falcon has 180B, 40B, 7.5B, and 1.3B models, while LLaMA has 7B, 13B, and 70B models. Of course, the choice of LLM size depends on task complexity and affects resource management.

Usually, a small or medium-sized LLM is the optimal choice. Large LLMs are applied only when necessary. One such use case is implementing an intentless policy in a chatbot. Its primary objective is to let the chatbot handle a diverse array of user queries effectively, ensuring a valuable and engaging interaction even when the user intent is implicit or uncertain.

Note that we use large LLMs as efficiently as possible. That means we can combine LLMs of different sizes or types to give you the desired functionality at a lower cost.
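Combining models of different sizes can be sketched as a simple router: messages with a recognizable intent go to a small model, and everything else falls back to a large one. The tier names, relative costs, and intent phrases below are hypothetical placeholders, and real routing would normally rely on an intent classifier instead of substring matching.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelTier:
    name: str             # hypothetical label, not a real model identifier
    relative_cost: float  # cost per request relative to the small tier


SMALL = ModelTier("small-llm", 1.0)
LARGE = ModelTier("large-llm", 20.0)

# Hypothetical intents that the small model is assumed to handle well.
KNOWN_INTENTS = {
    "opening hours": "hours",
    "track my order": "order_status",
    "return policy": "returns",
}


def route(message):
    """Send messages with a recognizable intent to the small model, the rest to the large one."""
    text = message.lower()
    for phrase, intent in KNOWN_INTENTS.items():
        if phrase in text:
            return SMALL, intent
    # Intent is implicit or uncertain: fall back to the large model.
    return LARGE, None


def total_relative_cost(messages):
    """Sum the relative cost of serving each message through the router."""
    return sum(route(m)[0].relative_cost for m in messages)
```

The more traffic the small tier can absorb, the less often the expensive model is called, which is where the cost saving comes from.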

Sasa co-founded Vega IT 15 years ago with his former university roommate Vladan. Their dream of founding an IT company has grown into the premier software company with more than 750 engineers in Serbia. If you prefer to send an email, feel free to reach out at hello.sasa@vegaitglobal.com.

Real people. Real pros.

Book a call today. Send us your contact details and a brief outline of what you might need, and we’ll be in touch within 12 hours.